Most tools are simple. You use them. They do what they’re meant to. That’s it.

AI doesn’t stay in the background like that.

Once you bring it into a space where people gather, whether to talk, read, share or just feel connected, you’re not just adding a feature. You’re shaping the structure. You’re making decisions about what gets seen, what gets missed and what kind of behaviour feels rewarded. Often without noticing.

It rarely feels like a turning point. A smarter feed here. A quicker reply there. A notification that arrives right on time. But these things add up. Over time, the system starts setting the pace for how content moves, and for how people move too.

That’s where trust begins to shift.

Not because something breaks. But because something quiet changes. People see less. They say less. And slowly, without being told, they adjust.

Oren Etzioni said it simply:

“AI is a tool. The choice about how it gets deployed is ours.”

That choice doesn’t usually come with a big moment. It’s not a launch or an announcement. It’s quiet. It shows up in defaults: what’s turned on, what’s tracked, what gets shown first. Little things that either keep the human layer intact or slowly wear it down.

And in a community, where presence matters more than performance, the margin for getting that wrong is smaller than most people think.

Where AI is already shaping community platforms

Most people won’t describe a platform as “AI-powered.” That’s not how they experience it. They’ll say it feels relevant. That the timing’s good. That replies show up quickly. Or they’ll admit, more quietly, they’re not sure why they’re seeing what they’re seeing.

That’s usually the tell. Not the branding or the interface but the quiet feeling that something’s working behind the scenes, something you didn’t configure but that seems to be choosing on your behalf.

And that’s where the shift begins.

In most community platforms today, especially the ones trying to grow, AI is already built into the infrastructure. Not in obvious ways. It’s more like plumbing.

It ranks. It filters. It nudges.

It drafts responses. Flags tone. Times reminders.

None of it feels dramatic in isolation. But over time, these small adjustments start to shape how people show up and who sticks around.

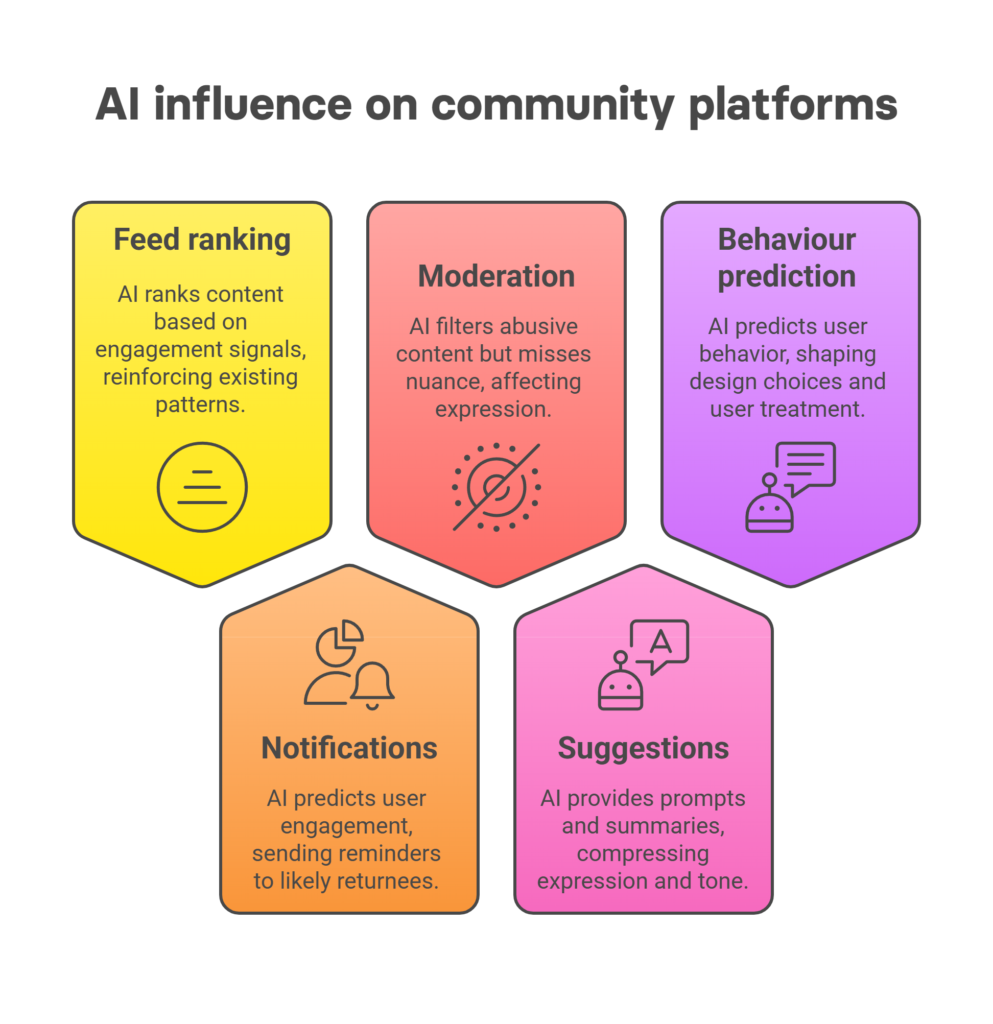

Here’s where that influence shows up most clearly:

Feed ranking

What appears first in your feed isn’t random. It’s based on signals: clicks, recency, engagement history, even who you tend to agree with. The model makes a guess about what’s relevant. But someone, somewhere, decided what relevance should mean.

And once that gets locked in, the system starts reinforcing the same patterns: the same voices, the same rhythms. The stuff that doesn’t fit starts falling through.
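
To make that concrete, here is a minimal sketch of a weighted feed score. It is not how any particular platform ranks content. The `Post` fields, the `WEIGHTS` values and the way each signal is scaled are all assumptions made up for illustration. The point is only that the weights are a choice, and changing them changes who ends up on top.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    clicks: int             # how often the post was opened
    age_hours: float        # time since posting
    author_affinity: float  # 0..1, how often the reader engaged with this author


# Hypothetical weights. Nobody discovers these; someone chooses them.
WEIGHTS = {"clicks": 0.5, "recency": 0.2, "affinity": 0.3}


def relevance(post: Post, weights: dict[str, float] = WEIGHTS) -> float:
    """Combine the signals into one score using a weighted sum."""
    click_signal = min(post.clicks / 100, 1.0)     # capped at 100 clicks
    recency_signal = 1.0 / (1.0 + post.age_hours)  # newer posts score higher
    return (
        weights["clicks"] * click_signal
        + weights["recency"] * recency_signal
        + weights["affinity"] * post.author_affinity
    )


def rank(posts: list[Post], weights: dict[str, float] = WEIGHTS) -> list[Post]:
    """Return posts ordered from highest to lowest score."""
    return sorted(posts, key=lambda p: relevance(p, weights), reverse=True)


posts = [
    Post("Popular regular", clicks=90, age_hours=20, author_affinity=0.9),
    Post("New member's first post", clicks=2, age_hours=1, author_affinity=0.0),
]

# With the default weights, the familiar voice wins.
print([p.title for p in rank(posts)])

# Shift the weight toward recency and the newcomer comes first.
recency_first = {"clicks": 0.1, "recency": 0.8, "affinity": 0.1}
print([p.title for p in rank(posts, recency_first)])
```

Same posts, same signals. Only the definition of relevance moved.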

Notifications

Who gets nudged, and when, is usually driven by a prediction. If the system thinks you’re likely to return, you get a reminder. If not, maybe nothing.

It’s efficient from a numbers point of view. But it also means that re-engagement becomes something you have to earn by already looking engaged. That’s not how real community works.
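
A rough sketch of that logic, assuming a hypothetical model has already produced a return probability for each member. The `should_nudge` function, the threshold of 0.6 and the member names are invented for this example, not taken from any real system.

```python
def should_nudge(return_probability: float, threshold: float = 0.6) -> bool:
    """Send a reminder only when the predicted chance of return clears the threshold."""
    return return_probability >= threshold


# Hypothetical predictions from some upstream model.
members = {
    "daily_poster": 0.92,
    "occasional_reader": 0.55,
    "went_quiet_last_month": 0.12,
}

nudged = [name for name, p in members.items() if should_nudge(p)]
print(nudged)
```

Only the member who already looks engaged gets the reminder. The person who went quiet, arguably the one a reminder was meant for, gets nothing.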

Moderation

AI does a reasonable job filtering out abusive content. But it often misses nuance. Sarcasm, frustration or culturally specific language can get flagged or demoted.

That doesn’t just affect what’s removed. It changes what people choose to say at all. If a system smooths everything, people start smoothing themselves too.

Suggestions

Reply prompts. Drafts. Summaries. They help. But they also compress expression. You start seeing the same tone. The same phrasing. Even the same patterns of politeness.

At first it feels efficient. Later, it feels flat. Like people have stopped speaking in their own voice.

Behaviour prediction

The system watches what users do. It tries to guess who’s going to churn, who might convert, who should get a nudge. That logic quickly starts shaping design choices. And once you’re optimising for likelihood, not intent, people get treated like probabilities, not participants.

Someone flagged as “unlikely” might stop getting help before they even had a chance.

And what this means for trust

None of this feels like harm in the moment. But it creates drift. The system starts changing who gets seen. Who gets supported. Who gets a second chance. And it does it quietly, inside choices no one remembers making, running on logic most users never agreed to.

That’s why the real question isn’t whether to use AI. It’s: Where is it already deciding things? And what kind of community is it quietly shaping?

What gets lost when you stop looking

The thing about these systems is, they rarely break. They just drift.

In the beginning, things feel smoother. Engagement goes up. Threads stay on track. Replies arrive faster. Everything looks fine, maybe even better. But the longer you let the defaults run, the more you start to notice what isn’t there.

You hear fewer unfamiliar voices. You see fewer edge cases. Posts feel shorter. Safer. More templated. There’s less surprise, less friction, less of the slow, messy honesty that makes a community feel alive.

People adjust. Not because they’re told to but because the system teaches them what works. What gets seen. What doesn’t.

Over time, even active users start shaving off the parts of themselves that don’t match the pattern. And that’s the cost.

You don’t just lose content. You lose contrast. You lose tension. You lose the people who brought something different because there was no signal telling the system they mattered. It’s not that anyone meant to optimise for sameness. It’s that no one meant not to.

The result isn’t a failure. It’s a slow flattening. One that doesn’t show up on a dashboard, but that changes the shape of participation all the same.

When the tools make things easier, the hard part is protecting what should still be hard: Disagreement. Vulnerability. Long, thoughtful posts that didn’t fit the model. People who speak a little differently. Or less often. Or from a place outside the usual circle.

Those are the signals most worth preserving. And they’re usually the first to go when you stop paying attention.

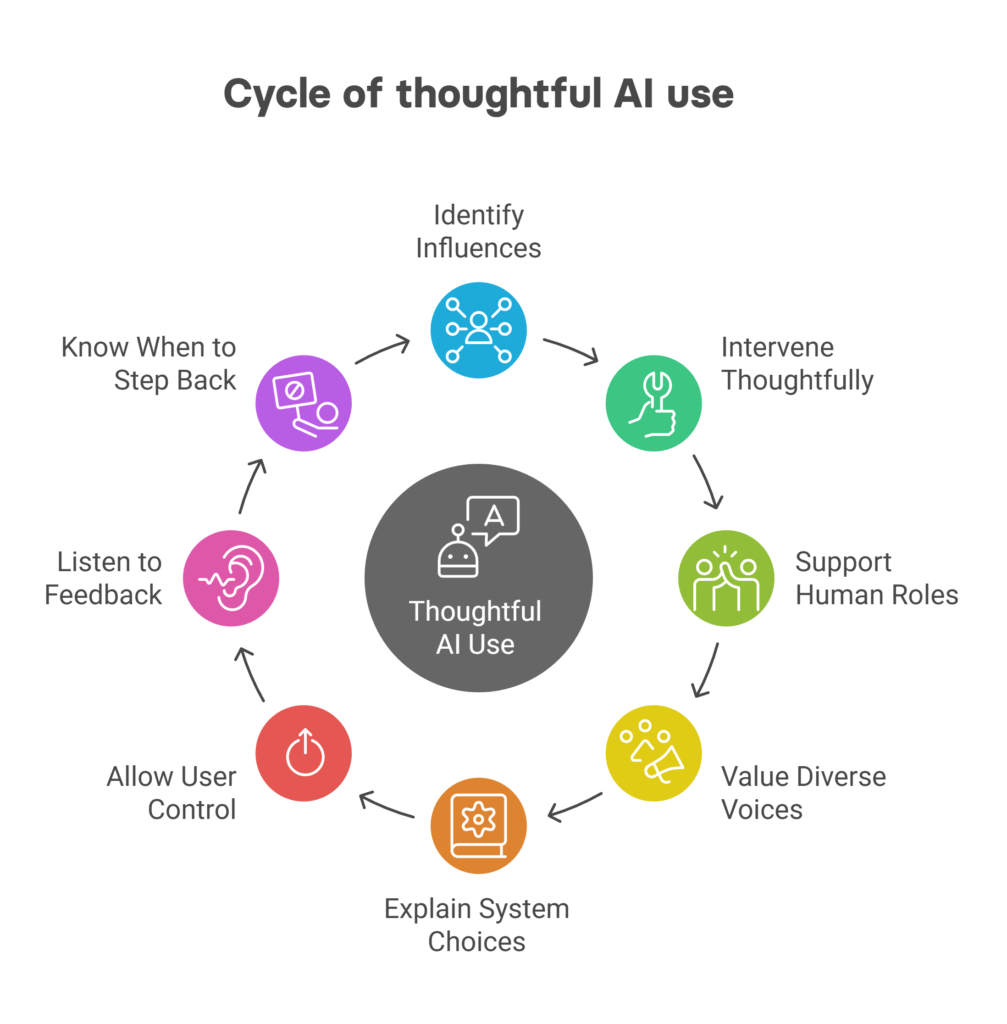

What thoughtful use actually looks like

You can’t just switch AI on and hope for the best. At some point, you have to ask: what exactly is it helping with? And what’s it shaping, even if we didn’t mean it to?

Thoughtful use doesn’t mean building the perfect system. It means noticing what’s already being influenced and choosing where to intervene. It means staying close to the human parts, especially the ones that don’t scale cleanly.

It looks like this:

- It means using automation to support curation, not replace it. Let the system help with surfacing posts but don’t hand it the job of deciding what matters. Give editors, moderators and community leads the last word, not because they’re perfect, but because they’re accountable.

- It means leaving room for what doesn’t perform. That one thread that no one replied to but still mattered. The member who shows up differently, who doesn’t fit the usual metrics. Design spaces where those voices aren’t pushed to the bottom just because they’re quiet.

- It means explaining how the system works: when things are recommended, when they’re flagged, when they’re ranked higher or lower. You don’t have to show the algorithm. You just have to show that choices were made.

- It means allowing people to step outside the loop. To browse chronologically. To turn things off. To slow down. Not everything has to be optimised in real time.

- It means listening before tuning. Noticing when people go quiet. When discussions stall. When replies sound the same. Sometimes that’s not a user problem. It’s a design outcome. And you only catch it if you’re looking for the quiet parts, not just the visible ones.
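
Two of the points above, stepping outside the loop and showing that choices were made, can be sketched in a few lines. This is an illustration under assumptions, not a recommended design: `Item`, `FeedEntry`, `build_feed` and the `score` field (standing in for the output of some ranking model) are all hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Item:
    title: str
    posted_at: datetime
    score: float  # output of some ranking model (assumed)


@dataclass
class FeedEntry:
    title: str
    reason: str  # shown to the reader, so the ordering is never silent


def build_feed(items: list[Item], chronological: bool) -> list[FeedEntry]:
    """Order the feed either by time or by score, and say which one was used."""
    if chronological:
        ordered = sorted(items, key=lambda item: item.posted_at, reverse=True)
        reason = "Shown in order of posting"
    else:
        ordered = sorted(items, key=lambda item: item.score, reverse=True)
        reason = "Ranked by predicted relevance"
    return [FeedEntry(item.title, reason) for item in ordered]


items = [
    Item("Quiet thread", datetime(2024, 5, 2, 9, 0), score=0.1),
    Item("Busy thread", datetime(2024, 5, 1, 9, 0), score=0.9),
]

for entry in build_feed(items, chronological=True):
    print(entry.title, "|", entry.reason)
```

With the chronological option on, the quiet thread appears first simply because it is newer. With it off, the busy thread leads, and the entry says why.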

It also means knowing when not to use AI. Not because it’s bad but because some things still need to be handled by people.

Conflict. Complexity. Feedback that isn’t easily parsed.

Those are where trust gets built. And where systems should be designed to step back.

Why we’ve chosen to wait

tchop™ doesn’t have AI features right now. That’s not because we’re behind. It’s because we’re not in a hurry.

There are a dozen ways we could introduce AI into the platform tomorrow, and we are experimenting with a lot of them. Smart tagging. Automated summaries. Personalised notifications. Predictive prompts. Most of them are useful. Some are even impressive. But usefulness was never the only test.

We’ve seen what happens when things are optimised too early. You lose the edges. You lose the quiet. You lose the stuff that doesn’t spike on a chart but still means something to the people who saw it.

Our platform is used for internal comms, closed communities, member groups, teams. Spaces where trust matters more than traffic. Where the real value isn’t in reach, but in rhythm. How often people show up, how they respond, what they feel safe saying.

That kind of space doesn’t need to be pushed. It needs to be held.

So we’ve been careful. We’re watching. Listening. Testing things quietly. But we’re not building around AI just because we can.

If and when we do introduce AI-driven features, they’ll follow a few basic rules:

- They’ll be transparent. If the system influences what’s shown, that influence will be visible.

- They’ll be configurable. Not every community needs the same signals, the same nudges, the same interventions.

- They’ll be supportive, not dominant. The job of AI will be to assist, not to shape the experience alone.

- And they’ll be designed to step back as easily as they step in.

Because we’re not building tchop™ for scale at all costs. We’re building it to be trusted. And sometimes, that means choosing what not to automate.

Choice that matters

Oren Etzioni said:

“AI is a tool. The choice about how it gets deployed is ours.”

That line gets quoted a lot. But it lands differently when you’re building something people return to, not because they have to, but because they want to.

The hardest part about that kind of space isn’t building features. It’s holding a shape. Holding a tone. Making sure that the decisions baked into the infrastructure reflect the values that aren’t always easy to measure.

Because trust doesn’t announce itself. It shows up slowly. Quietly. It shows up in how moderation is handled. In who gets nudged to return. In whether someone’s voice feels like it belongs, even when it doesn’t perform well.

When AI becomes part of the structure, it starts shaping those things in ways that are easy to overlook, until something starts to feel off.

That’s why the real choice isn’t about whether to use AI. It’s about how you use it, where it makes decisions and who gets considered in the process.

And if the answers to those questions aren’t clear, then maybe the most responsible thing for now is to wait.